FinDS

FinDS is a 3-week summer program designed to give high school students a strong base in the Mathematical Foundations of Data Science, with both in-person and virtual options available.

FinDS offers two tracks: Level 1 (Beginner) and Advanced. Learn more about these two tracks below, and visit mathfinds.ucsd.edu for more details.

- Dates: June 17 - July 2, 2024 (Advanced Track); July 22 - August 9, 2024 (Level 1 Track)

- Location: CSE Building at UC San Diego, UPenn, or online

FinDS Tracks

Level 1 Track (Beginner's Program)

The FinDS Level 1 Track is a college preparatory course that develops the mathematical foundation needed to pursue a career in computer science and data science.

Advanced Track

The FinDS Advanced Track (Advanced Program) covers topics ranging from probability to randomized and streaming algorithms, from linear algebra and graph theory to Google's search algorithms, and from calculus to widely used machine learning (ML) algorithms.

Application Requirements

Applications for the FinDS programs are open now! To apply, you must submit the following:

- A personal statement, as a PDF smaller than 10 MB.

- An unofficial transcript, as a PDF smaller than 10 MB.

- At least one letter of recommendation from a STEM teacher. Provide that teacher's email address in your application; the letter must be submitted by the application deadline.

The application for the Beginner’s Program can be found here.

The application for the Advanced Program can be found here.

Apply online by May 1, 2024, to be considered for the program.

Past FinDS Programs

Instructors

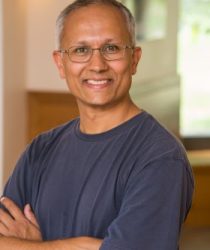

Professor at Rutgers University-Camden and Lecturer at the University of Pennsylvania

Rajiv Gandhi is a Professor of Computer Science at Rutgers University-Camden and a lecturer at the University of Pennsylvania. He received his Ph.D. in Computer Science from the University of Maryland in 2003. He also worked as a software engineer at Qualcomm from 1994 to 1996.

His research interests include algorithm design, combinatorial optimization, and probabilistic methods. A passionate educator who has worked with students from varied backgrounds, he received the Provost's Award for Teaching Excellence at Rutgers University-Camden in 2006. He received a Fulbright fellowship to teach in Mumbai, India, from January to May 2011. Prof. Gandhi has also received the 2022 ACM-SIGACT Distinguished Service Award.

Professor at the University of California, San Diego

Barna Saha is the E Guber Endowed Chair Associate Professor in the Department of Computer Science and Engineering (CSE) and the Halıcıoğlu Data Science Institute (HDSI) at UC San Diego. Before joining UCSD, she was an Associate Professor at UC Berkeley. Saha's research focuses on theoretical computer science, specifically algorithm design. She is passionate about diversity and teaching, and about seeing students from all backgrounds succeed. She is a recipient of the Presidential Early Career Award for Scientists and Engineers (PECASE), the highest honor given by the White House to early-career scientists, as well as a Sloan fellowship, an NSF CAREER Award, and multiple paper awards.

Professor at the University of California, San Diego, and Research Scientist at Meta AI

Kamalika Chaudhuri is a machine learning researcher. She is interested in the foundations of trustworthy machine learning, including robust machine learning, learning with privacy, and out-of-distribution generalization. She received her Ph.D. from the University of California, Berkeley.

Professor at the University of California, San Diego

Sanjoy Dasgupta is a Professor in the Department of Computer Science and Engineering at UC San Diego. He received his Ph.D. from Berkeley in 2000 and spent two years at AT&T Research Labs before joining UCSD. His area of research is algorithmic statistics, with a focus on unsupervised and minimally supervised learning.

Professor at the University of California, San Diego

Arya Mazumdar is an Associate Professor in HDSI at UC San Diego and an affiliate faculty member in Computer Science and Engineering. He obtained his Ph.D. from the University of Maryland, College Park, in 2011, specializing in information theory. He was subsequently a postdoctoral scholar at the Massachusetts Institute of Technology (2011-2012), an assistant professor at the University of Minnesota (2013-2015), and an assistant and then associate professor at the University of Massachusetts Amherst (2015-2021). Arya is a recipient of multiple awards, including a Distinguished Dissertation Award for his Ph.D. thesis (2011), the NSF CAREER Award (2015), a EURASIP JSAP Best Paper Award (2020), and the IEEE ISIT Jack K. Wolf Student Paper Award (2010). He currently serves as an Associate Editor for the IEEE Transactions on Information Theory and as an Area Editor for the Now Publishers Foundations and Trends in Communications and Information Theory series. Arya's research interests include coding theory (error-correcting codes and related combinatorics), information theory, statistical learning, and distributed optimization.

Professor at the University of California, San Diego

Gal Mishne is an Assistant Professor in HDSI at UC San Diego. Mishne's research lies at the intersection of signal processing and machine learning, applied to the graph-based modeling, processing, and analysis of large-scale, high-dimensional real-world data. She develops unsupervised and generalizable methods that allow the data to reveal its own story in an unbiased manner. Her research includes anomaly detection and clustering in remote sensing imagery, manifold learning on multiway data tensors with biomedical applications, and computationally efficient application of spectral methods. Most recently, her research has focused on unsupervised data analysis in neuroscience, from processing raw neuroimaging data, through discovering neural manifolds, to visualizing learning in neural networks.

Professor at the University of California, San Diego

Yusu Wang is a Professor in HDSI at UC San Diego. She obtained her Ph.D. from Duke University in 2004 and was a postdoctoral fellow at Stanford University from 2004 to 2005. Prior to joining UC San Diego, she was a Professor in the Computer Science and Engineering Department at The Ohio State University, where she also co-directed the Foundations of Data Science Research CoP (Community of Practice) at the Translational Data Analytics Institute (TDAI@OSU) from 2018 to 2020. Yusu Wang works primarily in geometric and topological data analysis. She is particularly interested in developing effective and theoretically justified algorithms for data analysis using geometric and topological ideas and methods, and in applying them to practical domains. Most recently, she has been exploring how to combine geometric and topological ideas with machine learning frameworks for modern data science. Yusu Wang received the Best PhD Dissertation Award from the Computer Science Department at Duke University. She also received the DOE Early Career Principal Investigator Award in 2006 and the NSF CAREER Award in 2008. Her work has received several best paper awards. She currently serves on the editorial boards of the SIAM Journal on Computing (SICOMP), Computational Geometry: Theory and Applications (CGTA), and the Journal of Computational Geometry (JoCG).