EVENTS

Alex Dimakis, a professor at UT Austin and the co-director of the National AI Institute on the Foundations of Machine Learning, will be presenting the talk “Deep Generative Models and Inverse Problems for Signal Reconstruction” on April 1, 2024, as a part of the Foundations of Data Science – Virtual Talk Series.

Register to attend this talk here! The Zoom link will be sent upon registration.

Join the 2-day EnCORE tutorial “Characterizing and Classifying Cell Types of the Brain: An Introduction for Computational Scientists” with Michael Hawrylycz, Ph.D., Investigator, Allen Institute.

This tutorial will take place on March 21 – 22, 2024 at UC San Diego in the Computer Science and Engineering Building in Room 1242. There will be a Zoom option available as well.

Register for this tutorial here.

Learn more about the tutorial by visiting this event page.

Join EnCORE’s talk on “Theoretical Exploration of Foundation Model Adaptation Methods” with Kangwook Lee, an assistant professor in the Electrical and Computer Engineering Department and the Computer Sciences Department at the University of Wisconsin-Madison.

This talk will take place on Friday, February 9, 2024, at UC San Diego, on the 4th floor of Atkinson Hall.

Alexander “Sasha” Rush is an Associate Professor at Cornell Tech, where he studies natural language processing and machine learning. Sasha received his PhD from MIT, supervised by Michael Collins, and was a postdoc at Facebook NY under Yann LeCun. His research interests are in deep learning text generation, generative modeling, and structured prediction. His group also supports open-source development, including the OpenNMT machine translation system. His research has received several best paper awards at NLP conferences and an NSF CAREER award.

Learn more about this upcoming talk here.

The NSF TRIPODS Workshop brings together a large and diverse team of researchers from each of the TRIPODS centers: EnCORE, FODSI, IDEAL, and IFDS. The aim of the Transdisciplinary Research In Principles Of Data Science (TRIPODS) is to connect the statistics, mathematics, and theoretical computer science communities to develop the theoretical foundations of data science through integrated research and training activities focused on core algorithmic, mathematical, and statistical principles. This workshop focuses on the contributions of each center’s work and will take place February 1 – 2, 2024.

FinDS is a 3-week summer program that is designed to provide high school students a strong base in the Mathematical Foundations of Data Science, with in-person and virtual options available.

FinDS offers two tracks: Beginners and Advanced. Applications for the two tracks are live now. Learn more about these two tracks below and visit mathfinds.ucsd.edu for more details.

The application for the Beginner’s Program can be found here.

The application for the Advanced Program can be found here.

Apply for the program online by May 1, 2024.

Join EnCORE’s talk on “Tight Bound on Equivalence Testing in Conditional Sampling Model” with Diptarka Chakraborty, an assistant professor at the National University of Singapore.

This talk will take place on Monday, December 4, 2023, at UC San Diego in the CSE Building in Room 4258 and on Zoom.

Join EnCORE’s talk on “Bounded Weighted Edit Distance” with Tomasz Kociumaka, a postdoctoral researcher at the Max Planck Institute for Informatics, Germany.

This talk will take place on Monday, November 20, 2023, at UC San Diego in the CSE Building in Room 4258 and on Zoom.

Join EnCORE’s talk on “Fredman’s Trick Meets Dominance Product: Fine-Grained Complexity of Unweighted APSP, 3SUM Counting, and More” with Yinzhan Xu, a PhD student studying theoretical computer science at MIT.

This talk will take place on Monday, October 23, 2023, at UC San Diego in the CSE Building in Room 4258.

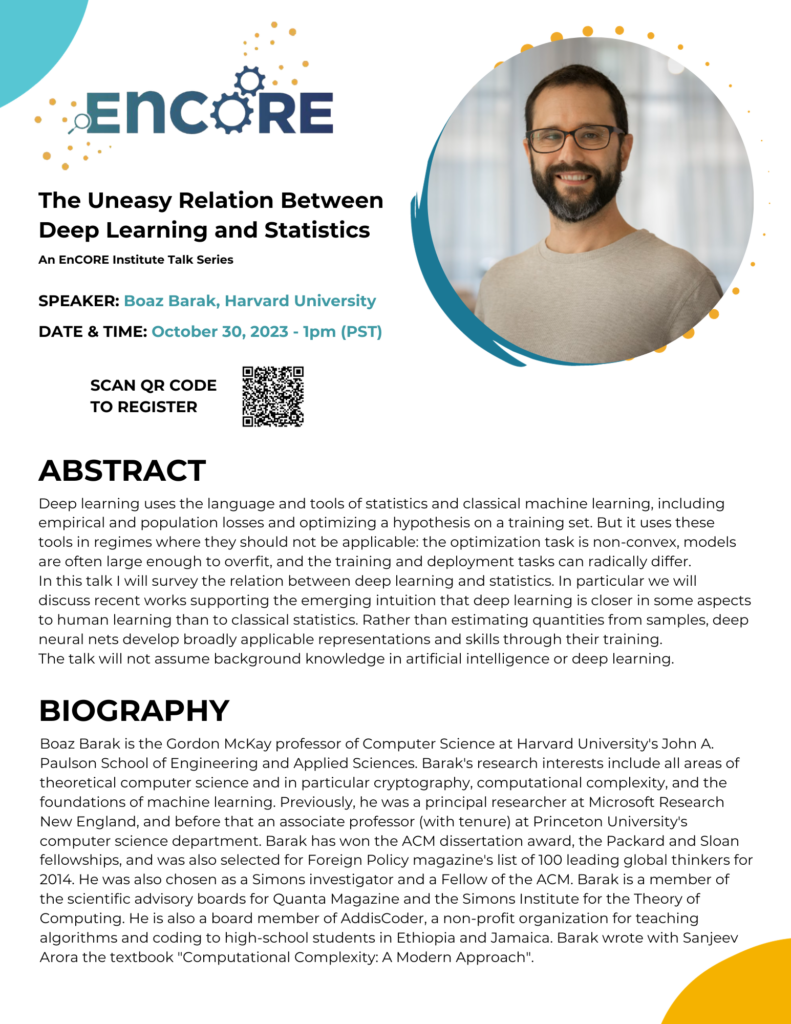

Boaz Barak is the Gordon McKay professor of Computer Science at Harvard University’s John A. Paulson School of Engineering and Applied Sciences. Barak’s research interests include all areas of theoretical computer science and in particular cryptography, computational complexity, and the foundations of machine learning. Previously, he was a principal researcher at Microsoft Research New England, and before that an associate professor (with tenure) at Princeton University’s computer science department. Barak has won the ACM dissertation award, the Packard and Sloan fellowships, and was also selected for Foreign Policy magazine’s list of 100 leading global thinkers for 2014.

Learn more about this upcoming talk here.

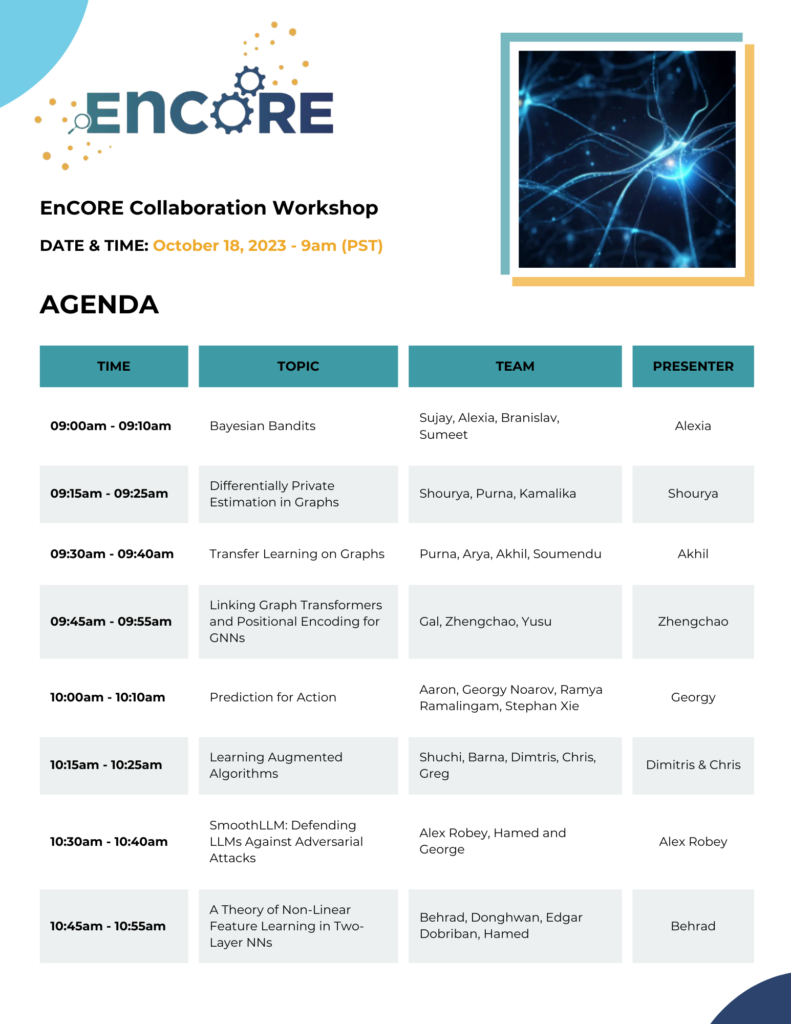

EnCORE students have teamed up to collaborate on various topics, such as augmented algorithms and large language models!

This workshop will take place on Wednesday, October 18 at 9 a.m. (PST) via Zoom.

EnCORE and the Data Science Institute (DSI) at the University of Chicago are partnering to celebrate the potential of exceptional data scientists through an intensive research and career workshop over the course of two days from Monday, November 13, 2023 to Tuesday, November 14, 2023. This workshop will take place at the University of Chicago.

Apply by September 22nd at 11:59pm CDT to be considered.

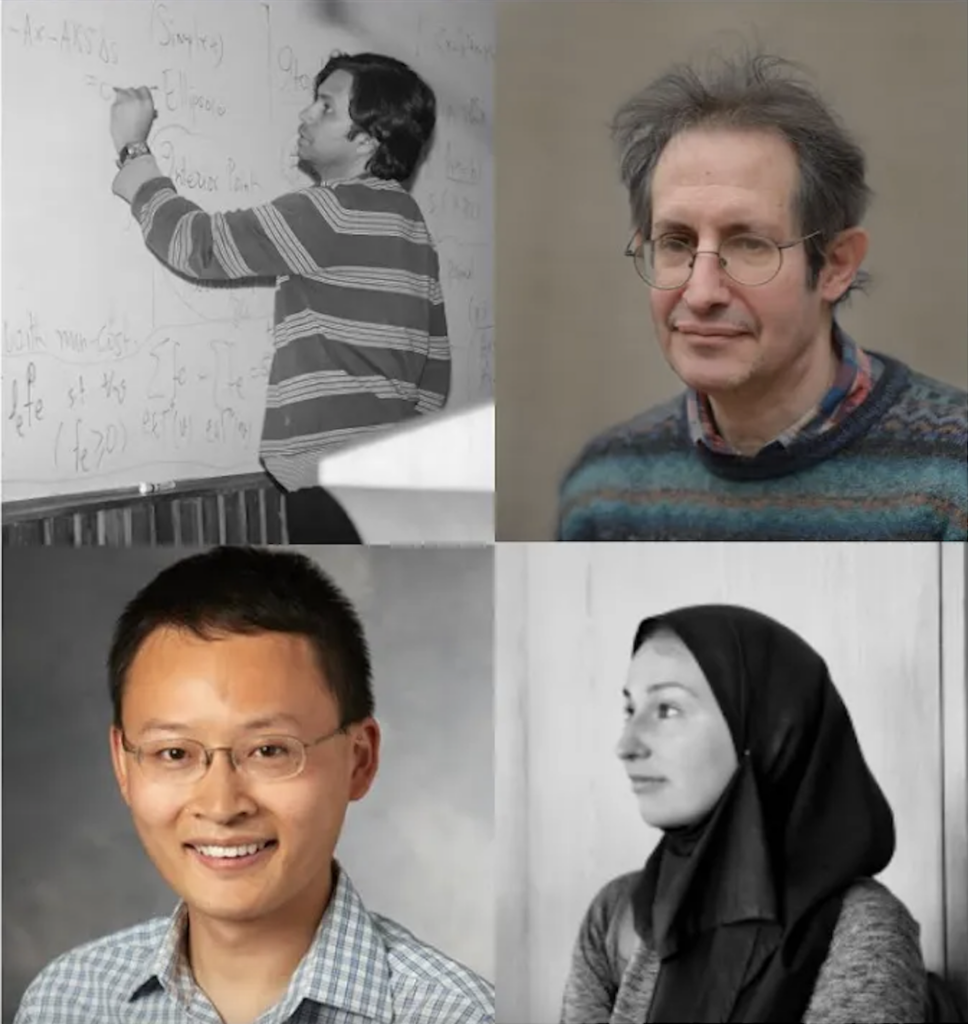

Join us and listen to the NSF TRIPODS Panel discussion on the reliability of Large Language Models (LLMs).

The panelists for this session include Marzyeh Ghassemi (MIT), James Zou (Stanford), Ernest Davis (NYU), and Nisheeth Vishnoi (Yale).

Tuesday, Sept 5, 2023 – 2pm PT (5pm ET, 9pm UTC)

EnCORE is organizing a workshop on the statistical and computational requirements for solving various learning problems from Monday, February 26, 2024 to Friday, March 1, 2024. This event aims to provide a forum to discuss the latest research and develop new ideas, as well as build bridges between different disciplines, such as applied mathematics, statistics, optimization, and theoretical computer science.

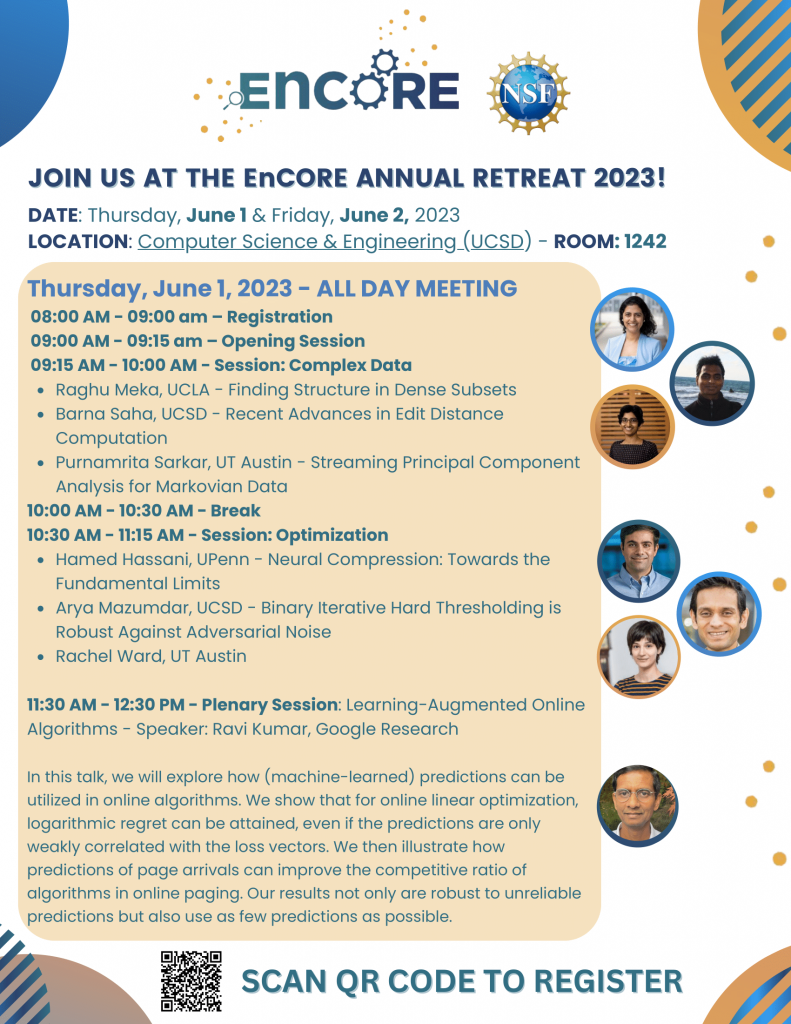

EnCORE is hosting a two-day conference from Thursday, June 1, 2023, to Friday, June 2, 2023. This event aims to unite scientists from diverse fields, providing a platform to engage with a broad range of students. The conference will be held at UC San Diego’s CSE Building in Room 1242.

Don’t miss out on this unique opportunity – secure your spot and choose your preferred tickets for each day by registering here.

TCS for All is holding its TCS for All Spotlight Workshop on Thursday, June 22nd, 2023 (2-4pm), in Orlando, Florida, USA, as part of the 55th Symposium on Theory of Computing (STOC) and TheoryFest. The workshop is open to all.

To attend the workshop in person, just show up! To attend remotely, registration is free, but you must register to get the Zoom link.

Register for this workshop here.

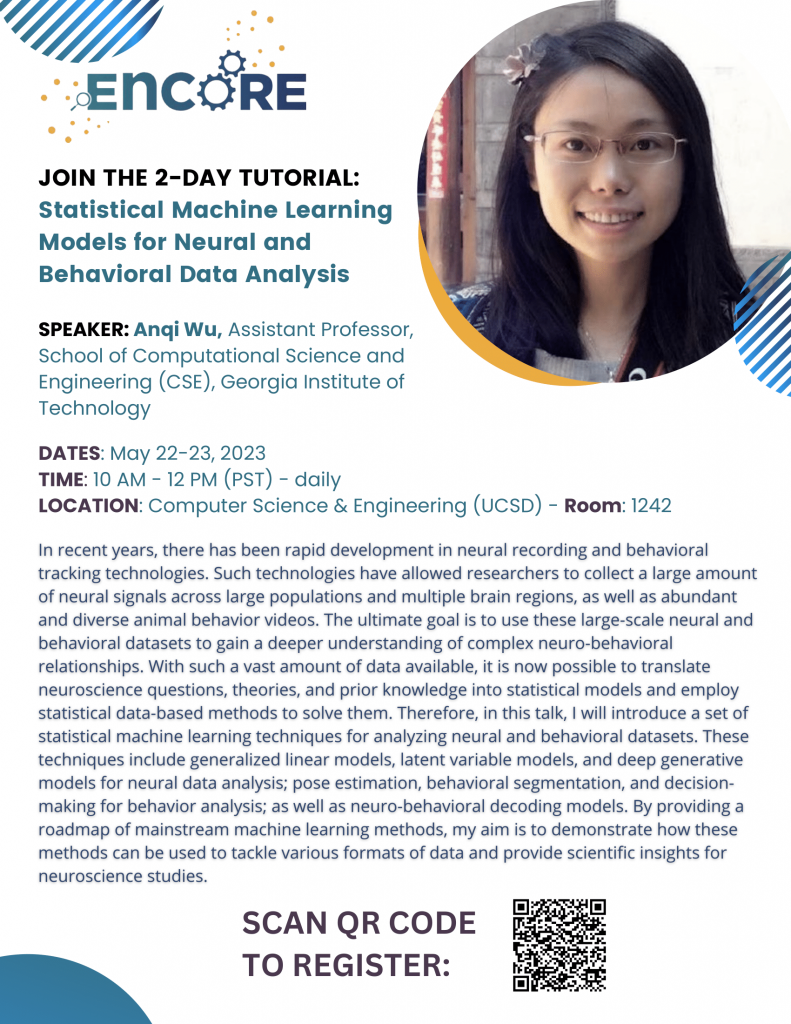

Join EnCORE’s 2-day tutorial on Statistical Machine Learning Models for Neural and Behavioral Data Analysis with Anqi Wu, an assistant professor in the Computer Science and Engineering (CSE) department at the Georgia Institute of Technology. This tutorial is geared toward Computer Science and Data Science junior graduate students with little to no experience in neuroscience.

This tutorial will take place on Monday, May 22, 2023 to Tuesday, May 23, 2023 at UC San Diego in the CSE Building in Room 1242.

Register for this tutorial here.

Learn more about the tutorial by visiting the Neuroscience Tutorial page of Events.

This workshop will address research in computational complexity, cryptographic assumptions, randomness in computation, machine learning, complexity of proofs, and related areas that bear on the issues raised by the five worlds conundrum.

This workshop will take place on Friday, May 12, 2023 to Saturday, May 13, 2023 at UC San Diego in the Computer Science and Engineering (CSE) Building. Visit wcw.ucsd.edu for more details about the event.

FinDS is an innovative program that is designed to provide high school students with a strong base in the Mathematical Foundations of Data Science over the course of 3 weeks. This program is offered both virtually and in-person.

Learn more about the program at mathfinds.ucsd.edu or by visiting the FinDS page of Outreach.

Apply by June 1, 2023 to be considered.

EnCORE Institute brings together students to meet biweekly to discuss research topics in an informal setting. Join us to expand your knowledge on various subjects while meeting like-minded individuals who are also part of EnCORE.

Vladimir Braverman is a Professor of Computer Science at Rice University and a Visiting Researcher with Google Research. Previously, he was an Associate Professor in the Department of Computer Science at Johns Hopkins University. Braverman received a PhD in Computer Science at UCLA. Braverman’s research interests include efficient sublinear algorithms and their applications to machine learning and digital health.

Thursday, March 16, 2023 – 1pm PT (4pm ET, 8pm UTC)

EnCORE Institute was invited to organize a workshop on the theme of Distributed Learning and Decision Making across four sessions at the 2023 Information Theory and Applications workshop (ITA), a casual gathering for researchers who apply theory to diverse areas in science and engineering. ITA was held from Sunday, February 12, 2023 to Friday, February 17, 2023.

Amit Chakrabarti is a professor at Dartmouth College in the Department of Computer Science. His research focuses on theoretical computer science. Specifically, this includes complexity theory, data stream algorithms, and approximation algorithms. He is a recipient of the NSF CAREER Award, Karen E. Wetterhahn Award, the McLane Family Fellowship, and the Friedman Family Fellowship.

Thursday, Feb 9, 2023 – 2pm PT (5pm ET, 10pm UTC)

Amit Chakrabarti (Dartmouth)

EnCORE brings together scientists from multiple disciplines to reach a broad range of students in this one-day mini-conference.

Monday, December 19, 2022

Register by December 15, 2022

Tickets are FREE for EnCORE Affiliated Faculty, Program Participants, all CSE and HDSI faculty and students, and all remote participants. Tickets are $55 for Guests attending in person.

Omri Ben-Eliezer is an Applied Mathematics instructor at MIT who is affiliated with the Foundations of Data Science Institute. He conducted research under the guidance of Noga Alon at Tel Aviv University, Moni Naor at the Weizmann Institute, and Madhu Sudan at Harvard University. His research interests center around the theoretical and algorithmic foundations of big data, which include sublinear time and streaming algorithms, robustness and privacy, knowledge representation, and complex networks.

Wednesday, Dec 14, 2022 – 1pm PT (4pm ET, 10pm CET, 9pm UTC)

Omri Ben-Eliezer (MIT)

Edith Cohen is a research scientist at Google and a professor at Tel-Aviv University in the School of Computer Science. Before that, she served as a member of the research staff at AT&T Labs Research and as a principal researcher at Microsoft Research. Her research interests include algorithm design, data mining, machine learning, optimization, and networks. In her research, she has developed models and scalable algorithms in a variety of areas, including query processing and optimization, caching, routing, and streaming. Cohen received the IEEE Communications Society William R. Bennett Prize in 2007 and was recognized as an ACM Fellow in 2017.

Saturday, November 12, 2022 – 1pm PT (4pm ET, 10pm CET, 9pm UTC)

Edith Cohen (Google Research / Tel-Aviv University)

David Woodruff is a professor at Carnegie Mellon University in the Computer Science Department. Before that, he was a research scientist at the IBM Almaden Research Center. His research interests include data stream algorithms, distributed algorithms, machine learning, numerical linear algebra, optimization, sketching, and sparse recovery. He is the recipient of the 2020 Simons Investigator Award, the 2014 Presburger Award, and Best Paper Awards at STOC 2013, PODS 2010, and PODS 2020. At IBM, he was a member of the Academy of Technology and a Master Inventor.

Wednesday Oct 5 – 1pm PT (4pm ET, 10pm CEST, 8pm UTC)

David Woodruff (CMU)

For the first time since 2019, #DeepMath will be in person, in November in San Diego 😎 Abstract submissions are open; the deadline is August 15. We are looking forward to great submissions focusing on the theory of deep networks: https://deepmath-conference.com/submissions

@deepmath1

A virtual series that brings together prominent leaders in the Foundations of Data Science across Computer Science, Engineering, Mathematics, and Statistics.

Check the list of our past talks.

Subscribe to our mailing list.

Subscribe to our YouTube channel.

Follow us on Twitter.

The Data Science Fall Retreat will take place on December 10th, 2021, and will bring together researchers, students, and postdocs from four NSF TRIPODS Institutes. Detailed program here.

Many modern applications produce massive datasets containing a lot of redundancy, either in the form of highly skewed frequencies or repeating motifs/fragments of identical data. Recent years have seen considerable activity in new data compression techniques and in computation over compressed data. However, there has been little cross-community interaction between theoreticians and those working with real data. The Computation+Compression workshop 2022 aims to bridge the gap by bringing together experts from the Theoretical Computer Science and Bioinformatics communities who work in this area.

In recent years, the computational paradigm for large-scale machine learning and data analytics has shifted to massive distributed systems composed of individual computational nodes (ranging from GPUs to low-end commodity hardware). A typical distributed optimization problem for training machine learning models has several considerations. The first of these is the convergence rate of the algorithm. While the convergence rate is a measure of efficiency in conventional systems, in today’s distributed computing framework, communication cost is at odds with the convergence rate. In recent years, various techniques have been developed to alleviate the communication bottleneck, including but not limited to quantization and sparsification, local updates, and one-shot learning. The goal of this workshop is to bring these ideas together.

Past Events:

Shuchi Chawla on Foundations of Data Science Series, April 6, 2022

Eric Tchetgen Tchetgen on Foundations of Data Science Series, March 16, 2022

Computation+Compression workshop to be held on Jan 19th, 2022. Register to participate.

Piotr Indyk, MIT, on Foundations of Data Science Virtual Series: Learning Based Sampling and Streaming, Jan 13th, 2022

Data Science Annual Retreat, Dec 10th, 2021

Undergraduate Capstone Project Presentation, Dec , 2021

Nicole Immorlica, Microsoft Research, on Foundations of Data Science Virtual Series: Communicating with Anecdotes, Nov 16th, 2021

Maxim Raginsky, UIUC, on Foundations of Data Science Virtual Series: Neural SDEs: Deep Generative Models in the Diffusion Limits, Oct 21st, 2021

Christos Papadimitriou, Columbia University, on Foundations of Data Science Virtual Series: How Does the Brain Beget the Mind?, Sept 23rd, 2021

Hamed Hassani, University of Pennsylvania, on Foundations of Data Science Virtual Series: Learning Robust Models, May 6th, 2021

Workshop on Communication Efficient Distributed Optimization, April 9th, 2021